I am a PhD student at the University of Washington advised by Professor Abhishek Gupta. My research is graciously supported by the NSF Graduate Research Fellowship. I'm broadly interested in embodied AI and building intelligent robots. I am currently interning at Microsoft Research. Previously, I did my undergrad at UC Berkeley, where I worked with Professors Sergey Levine and Kuan Fang in the Berkeley Artificial Intelligence Research (BAIR) Lab.

Patrick Yin*, Tyler Westenbroek*, Simran Bagaria, Kevin Huang, Ching-An Cheng, Andrey Kolobov, Abhishek Gupta (* indicates equal contribution)
ICLR 2025
project page / arXiv
We propose Simulation-Guided Fine-Tuning (SGFT), a simple, general sim2real framework that extracts structured exploration priors from simulation to accelerate real-world RL.

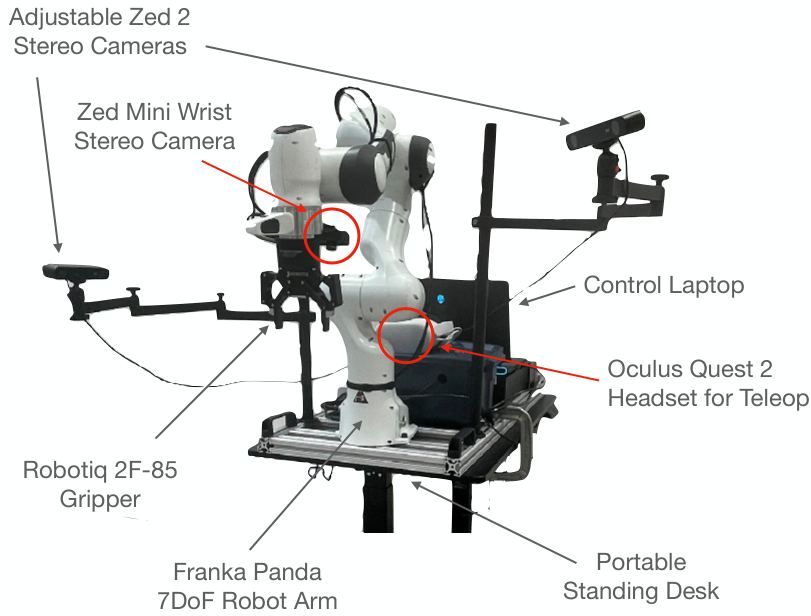

Alexander Khazatsky*, Karl Pertsch*, ..., Patrick Yin, ..., Sergey Levine, Chelsea Finn
RSS 2024
project page / arXiv
A large, diverse robot manipulation dataset with 76k demonstration trajectories.

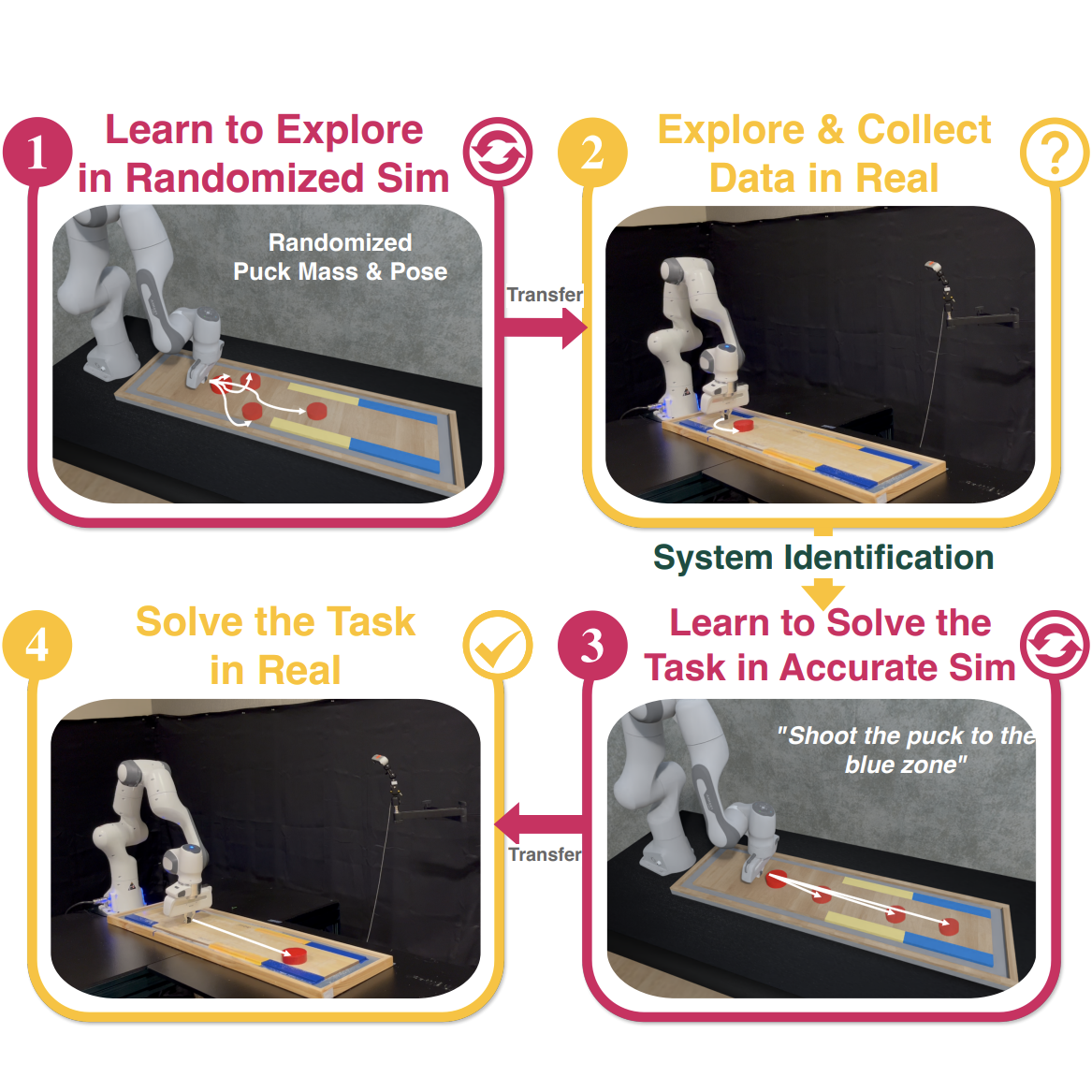

Marius Memmel, Chuning Zhu, Andrew Wagenmaker, Patrick Yin, Dieter Fox, Abhishek Gupta
ICLR 2024 (Oral Presentation)
project page / arXiv
We propose a learning system that can leverage a small amount of real-world data to autonomously refine a simulation model, enabling sim-to-real transfer for real-world robotic manipulation tasks.

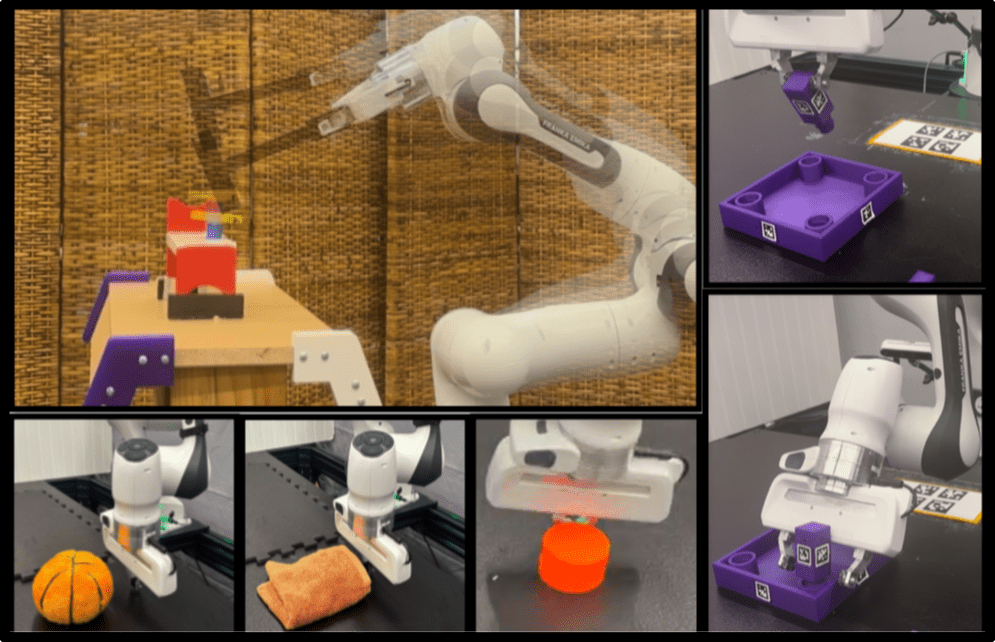

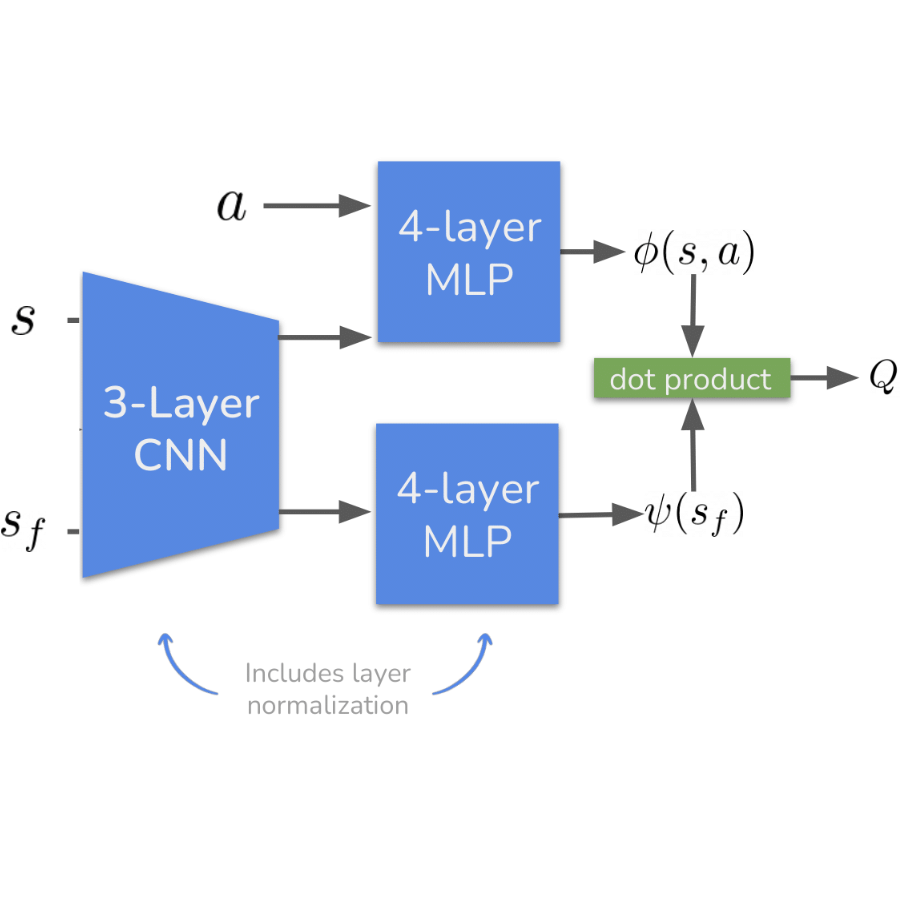

Chongyi Zheng, Benjamin Eysenbach, Homer Rich Walke, Patrick Yin, Kuan Fang, Ruslan Salakhutdinov, Sergey Levine
ICLR 2024 (Spotlight Talk)
project page / arXiv
We discover that a shallow and wide architecture can boost the performance of contrastive RL approaches on simulated benchmarks. Additionally, we demonstrate that contrastive approaches can solve real-world robotic manipulation tasks.

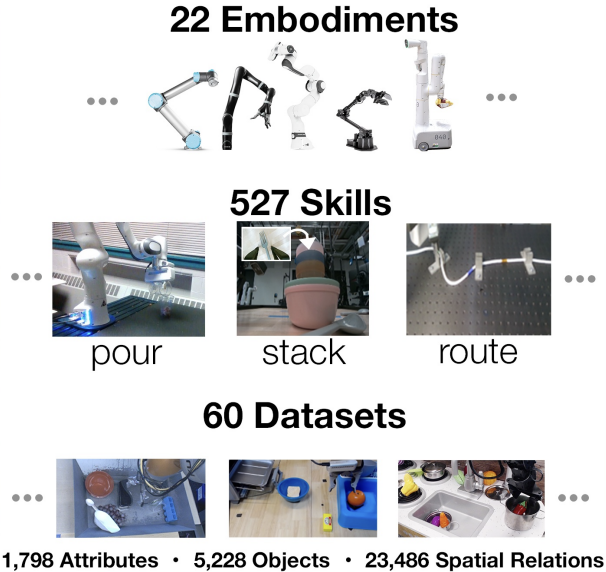

Open X-Embodiment Collaboration, ..., Patrick Yin, ...
ICRA 2024 (Best Paper)
project page / arXiv
A large, open-source real robot dataset with 1M+ real robot trajectories spanning 22 robot embodiments, from single robot arms to bi-manual robots and quadrupeds.

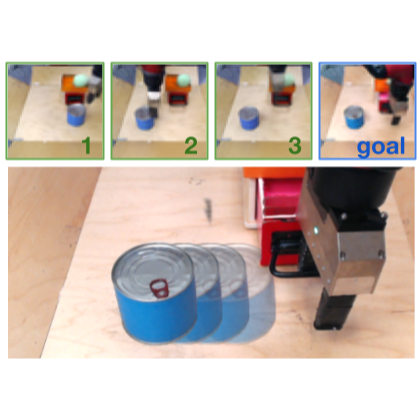

Kuan Fang, Patrick Yin, Ashvin Nair, Homer Rich Walke, Gengchan Yan, Sergey Levine
CoRL 2022 (Oral Presentation)
project page / arXiv
We propose Fine-Tuning with Lossy Affordance Planner (FLAP), a framework that leverages diverse offline data for learning representations, goal-conditioned policies, and affordance models that enable rapid fine-tuning to new tasks in target scenes.

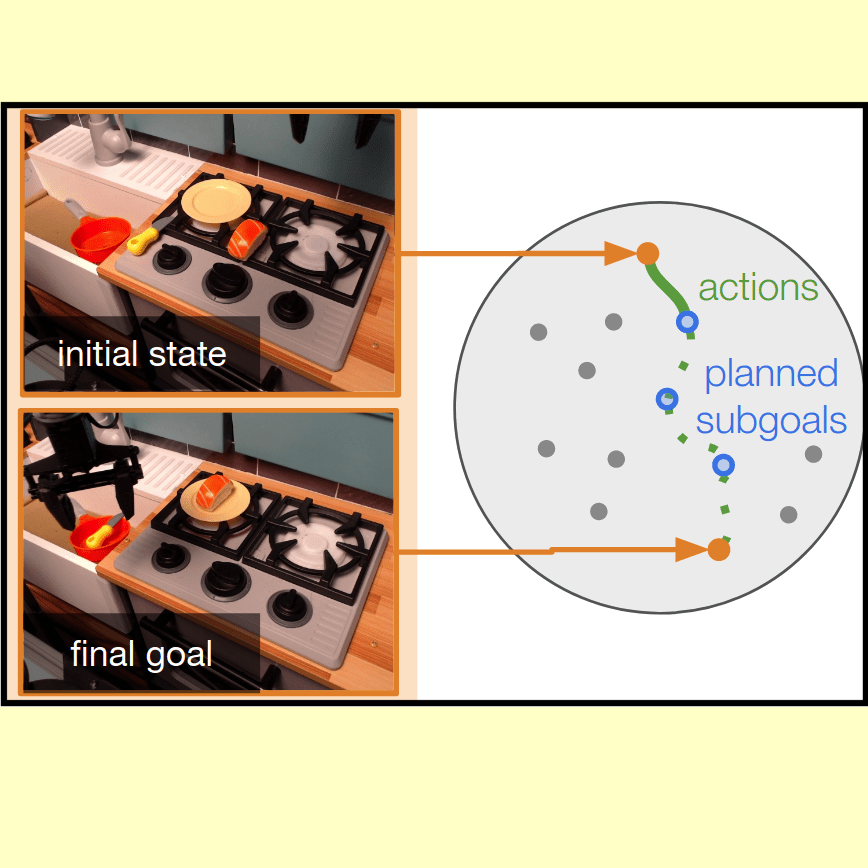

Kuan Fang*, Patrick Yin*, Ashvin Nair, Sergey Levine (* indicates equal contribution)
IROS 2022
project page / arXiv
We propose Planning to Practice (PTP), a method that makes it practical to train goal-conditioned policies for long-horizon tasks.

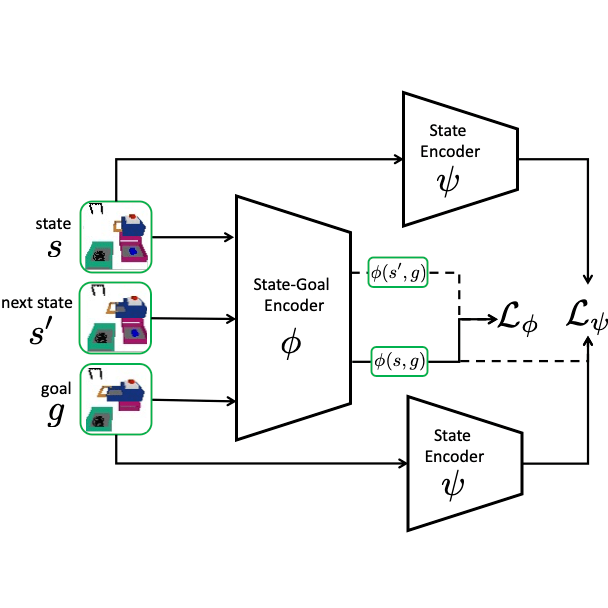

Philippe Hansen-Estruch, Amy Zhang, Ashvin Nair, Patrick Yin, Sergey Levine
ICML 2022
project page / arXiv
We propose a new form of state abstraction called goal-conditioned bisimulation that captures functional equivariance, allowing for the reuse of skills to achieve new goals in goal-conditioned reinforcement learning.

Notes that I took on machine learning, math, and books during undergrad

Coursework that I took as an undergrad

Coding projects from when I was first learning to code :)

Website template from Jon Barron.